MatchMeta.Info

Filenames are trivial to change, but it is still important to know which ones are common during an investigation. No one can remember every filename; there are already more than twenty-four million in the NSRL data set alone. MatchMeta.Info is my way of automating these comparisons as part of the analysis process. Not all investigators have Internet access on their lab machines, so I wanted to share the steps to build your own internal site.

Server Specifications

Twisted Python Installation

I prefer Ubuntu, but feel free to use whatever operating system you’re most comfortable with. The installation process has become very simple!

apt-get install python-dev python-pip

pip install service_identity twisted

Twisted Python Validation
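A quick way to validate the installation is to confirm that the interpreter can import the twisted and service_identity modules. The sketch below is an illustration, not part of MatchMeta.Info; the check_modules helper is hypothetical. It runs under Python 2.7 or Python 3, so use the same interpreter that pip installed into.

```python
import importlib


def check_modules(names):
    """Map each module name to True if it imports cleanly, else False."""
    results = {}
    for name in names:
        try:
            importlib.import_module(name)
            results[name] = True
        except ImportError:
            results[name] = False
    return results


if __name__ == "__main__":
    # Run with the interpreter pip installed into (e.g. /usr/bin/python).
    for name, ok in sorted(check_modules(["twisted", "service_identity"]).items()):
        print("%s: %s" % (name, "OK" if ok else "MISSING"))
```

Both modules should report OK before you move on to the NSRL data.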

NSRL Filenames

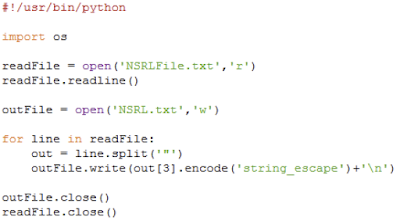

I download the NSRL data set directly from NIST, then parse out the filenames with a Python script that I host on the GitHub project site.
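The actual parsing script lives on the GitHub project site. As a rough illustration only, the Python 3 sketch below assumes the RDS NSRLFile.txt layout: a comma-separated file with a header row that includes a FileName column. It writes one unique filename per line. The function names and the Latin-1 encoding choice are assumptions, not taken from the original script.

```python
import csv


def extract_filenames(lines):
    """Return the sorted, de-duplicated FileName values from NSRL CSV lines.

    `lines` can be any iterable of text lines, such as an open file object.
    """
    reader = csv.DictReader(lines)
    return sorted({row["FileName"] for row in reader if row.get("FileName")})


def write_filenames(src_path, dst_path):
    """Write one unique filename per line from src_path to dst_path."""
    # Latin-1 is an assumption; it maps every byte to a character, so
    # decoding never fails on unexpected bytes.
    with open(src_path, newline="", encoding="latin-1") as src:
        names = extract_filenames(src)
    with open(dst_path, "w", encoding="utf-8") as dst:
        for name in names:
            dst.write(name + "\n")
    return len(names)
```

For example, write_filenames("NSRLFile.txt", "nsrl251.txt") would produce a list in the same one-name-per-line shape. The set removes the many duplicate filenames across products, and sorting keeps the output stable between runs.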

Alternatively, feel free to download the precompiled list of filenames that I have posted here:

meow://storage.bhs1.cloud.ovh.net/v1/AUTH_bfbb205b09774544bb79dd7bf8c3a1d8/MatchMetaInfo/nsrl251.txt.zip

MatchMeta.Info Setup

First, create a folder to hold the mmi.py file from the GitHub site and the nsrl251.txt file uncompressed in the previous section. For example, a www folder can be created in the /opt directory for these files:

/opt/www/mmi.py

/opt/www/nsrl251.txt

Second, make the two files readable only by their owner to limit permissions.

chmod 400 /opt/www/mmi.py /opt/www/nsrl251.txt

Third, make the two files owned by the webserver user and group.

chown www-data:www-data /opt/www/mmi.py /opt/www/nsrl251.txt

Fourth, remove write access from the www folder so that only its owner can list and enter it.

chmod 500 /opt/www

Fifth, make the www folder owned by the webserver user and group.

chown www-data:www-data /opt/www

MatchMeta.Info Service

Upstart on Ubuntu can run the Twisted Python script as a service through a new /etc/init/mmi.conf file. Paste the following lines into the newly created file. It’s critical to use exact absolute paths in both the mmi.py and mmi.conf files, or the service will not start. Upstart is the default init system on Ubuntu releases before 15.04, which switched to systemd.

start on runlevel [2345]

stop on runlevel [016]

setuid www-data

setgid www-data

exec /usr/bin/python /opt/www/mmi.py

respawn

MatchMeta.Info Port Forwarding

Port 80 is privileged, and we don’t want to run the service as root, so port forwarding is used instead. Appending the following to the bottom of the /etc/ufw/before.rules file redirects incoming TCP traffic on port 80 to port 8080, which lets the Python service run as the www-data user.

*nat

-F

:PREROUTING ACCEPT [0:0]

-A PREROUTING -p tcp --dport 80 -j REDIRECT --to-port 8080

COMMIT

Configure Firewall

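Please set up the firewall rules to meet your environment’s requirements. Ports 80 and 8080 are currently set up for the MatchMeta.Info service; 8080 must be allowed as well because the redirect rewrites the destination port before the firewall’s filter rules see the packet. Don’t forget to allow SSH for system access.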

ufw allow 80/tcp

ufw allow 8080/tcp

ufw allow ssh

ufw enable

MatchMeta.Info Validation

Finally, everything is set to start the MatchMeta.Info service!

start mmi
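Before browsing, it can help to confirm that something is accepting connections on port 8080, the port the redirect rule forwards to. A minimal Python 3 sketch using the standard socket module follows; is_listening is a hypothetical helper. Checking 127.0.0.1 on port 8080 directly is deliberate: traffic generated on the server itself does not pass through the PREROUTING redirect, so a local request to port 80 would not reach the service.

```python
import socket


def is_listening(host, port, timeout=2.0):
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


if __name__ == "__main__":
    print("mmi on 8080:", "up" if is_listening("127.0.0.1", 8080) else "down")
```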

Browsing to these sites should display the word OK.

Browsing to these sites should display NA.
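The mmi.py source is on the GitHub site and is not reproduced here. Conceptually, assuming OK signals a filename found in nsrl251.txt and NA one that is not, the response can be modeled as a set-membership test. The sketch below assumes one filename per line and exact, case-sensitive matching; load_filenames and lookup are hypothetical names, and the real service may normalize names differently.

```python
def load_filenames(lines):
    """Build a set of filenames from an iterable of lines, one name per line."""
    return {line.rstrip("\r\n") for line in lines if line.strip()}


def lookup(known, filename):
    """Return "OK" if filename is in the known set, otherwise "NA"."""
    return "OK" if filename in known else "NA"
```

In practice the set would be built once at startup, for example from open("/opt/www/nsrl251.txt"), so that each request costs only a hash lookup rather than a scan of millions of lines.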

In the future, I plan to keep moving MatchMeta.Info features from the command-line version into the web interface. A morph for James Habben’s evolve project, a web interface for Volatility, has already been submitted to bring MatchMeta.Info into that analysis process.

John Lukach

@jblukach