Malware Training Sets: A machine learning dataset for everyone

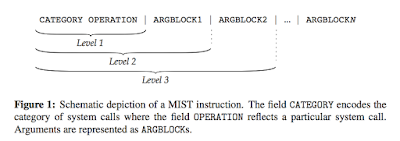

- Extracting features directly from samples. This is the easiest solution, since the extracted features are directly related to the sample, such as (but not limited to) file “sections”, “entropy”, “Syscalls” and decompiled assembly n-grams.

- Extracting features from sample analysis. This is the hardest solution, since it includes both static analysis, such as (but not limited to) file sections, entropy and “Syscall/API”, and dynamic analysis, such as (but not limited to) “Contacted IP”, “DNS Queries”, “execution processes” and “AV signatures”. Plus, I needed a complex dynamic analysis system including multiple sandboxes and static analysers.
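As a rough illustration of the static side, the sketch below computes two of the feature types mentioned above: Shannon entropy and n-gram counts. It is not the tooling used to build these datasets. It works on raw bytes rather than on individual PE sections or decompiled assembly, and the function names `shannon_entropy` and `byte_ngrams` are hypothetical. Entropy close to 8 bits per byte is often a hint of packed or encrypted content.

```python
import math
from collections import Counter


def shannon_entropy(data: bytes) -> float:
    """Shannon entropy in bits per byte: 0.0 for empty or constant input, at most 8.0."""
    if not data:
        return 0.0
    counts = Counter(data)  # iterating over bytes yields ints 0..255
    total = len(data)
    # sum of p * log2(1/p) over every byte value that occurs
    return sum((c / total) * math.log2(total / c) for c in counts.values())


def byte_ngrams(data: bytes, n: int = 2) -> Counter:
    """Count overlapping byte n-grams (a raw-byte stand-in for assembly n-grams)."""
    return Counter(data[i:i + n] for i in range(len(data) - n + 1))


print(shannon_entropy(b"\x00" * 16))       # 0.0: a single repeated byte value
print(shannon_entropy(bytes(range(256))))  # 8.0: every byte value exactly once
print(byte_ngrams(b"abab"))
```

To get per-section entropy, a PE parser would first have to supply each section's bytes; that step is deliberately left out here.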

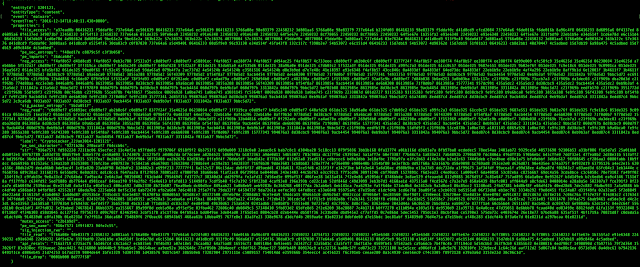

This means the category is “sig” (short for signature) and the action is “antimalware_metascan”. The evidences are empty, meaning that (in this case) Metascan found no signature.

“sig_antivirus_virustotal”: “ffebfdb8 9dbdd699 600fe39f 45036f7d 9a72943b”

This means the VirusTotal signature (“antivirus_virustotal”) found five evidences (ffebfdb8 9dbdd699 600fe39f 45036f7d 9a72943b).
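Reading the two examples above, each feature appears to be a `category_action` key whose value is a space-separated list of evidences. The sketch below parses one entry under that assumption. Splitting at the first underscore, the `Feature` tuple and `parse_feature` are illustrative choices inferred from the examples, not a documented schema; the Metascan value is assumed to be an empty string. Keys without an underscore, such as `str` in the feature list, are kept whole as the category.

```python
from typing import List, NamedTuple


class Feature(NamedTuple):
    category: str         # e.g. "sig" (signature)
    action: str           # e.g. "antivirus_virustotal"; "" if the key has no "_"
    evidences: List[str]  # empty list when nothing was found


def parse_feature(key: str, value: str) -> Feature:
    """Parse one "category_action": "ev1 ev2 ..." entry (assumed layout)."""
    # Split only at the first underscore:
    # "sig_antivirus_virustotal" -> ("sig", "antivirus_virustotal")
    category, _, action = key.partition("_")
    # str.split() with no argument collapses whitespace and turns "" into [].
    return Feature(category, action, value.split())


# Hypothetical usage with the two examples from the post.
vt = parse_feature("sig_antivirus_virustotal",
                   "ffebfdb8 9dbdd699 600fe39f 45036f7d 9a72943b")
ms = parse_feature("sig_antimalware_metascan", "")
print(vt.category, vt.action, len(vt.evidences))  # sig antivirus_virustotal 5
print(ms.evidences)                               # []
```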

A fundamental property is “label”, which classifies the malware family. I decided to name this field “label” rather than “malware_name”, “malware_family” or “classification” in order to stay compatible with the many machine learning implementations that expect a field called “label” in order to work properly (it seems to be a de facto standard for many engine implementations).
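To show why the field name matters, here is a minimal sketch that separates each record's “label” (the training target) from its remaining features. It assumes each sample is a Python dict with a top-level `label` key; the helper `split_label` and the example feature values are hypothetical.

```python
from typing import Any, Dict, List, Tuple

Record = Dict[str, Any]


def split_label(records: List[Record], label_key: str = "label") -> Tuple[List[Record], List[Any]]:
    """Return (X, y): feature dicts without the label, and the labels in order."""
    X: List[Record] = []
    y: List[Any] = []
    for record in records:
        row = dict(record)            # shallow copy, so the caller's dict is left untouched
        y.append(row.pop(label_key))  # raises KeyError if a record has no label
        X.append(row)
    return X, y


# Hypothetical records; real samples carry many more features.
records = [
    {"sig_antivirus_virustotal": "ffebfdb8 9dbdd699", "label": "Zeus"},
    {"sig_packer_upx": "UPX0 UPX1", "label": "Crypto"},
]
X, y = split_label(records)
print(y)  # ['Zeus', 'Crypto']
```

A list of feature dicts such as `X` is the input shape accepted by scikit-learn's `DictVectorizer`, which can turn it into a matrix for a classifier trained on `y`.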

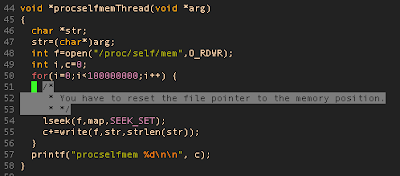

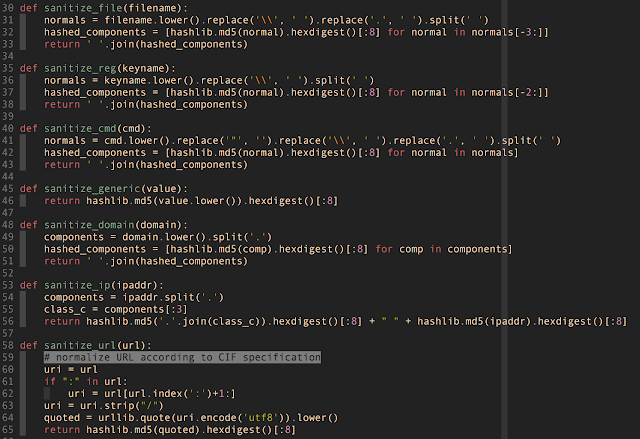

Sanitization Procedures

Training DataSets Generation: The Simplified Process

Data Samples

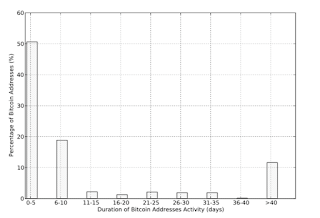

Today (please refer to the blog post date) the collected, classified dataset is composed of the following samples:

- APT1: 292 samples

- Crypto: 2024 samples

- Locker: 434 samples

- Zeus: 2014 samples
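The families are far from balanced: the four counts sum to 4,764 samples, of which APT1 makes up only about 6%, while Crypto and Zeus together account for roughly 85%. A classifier trained on this data as-is may favour the larger families. The sketch below computes each family's share and inverse-frequency class weights with the formula scikit-learn uses for `class_weight="balanced"`, namely n_samples / (n_classes * n_c). Whether weighting is appropriate depends on the model, and the helper names are hypothetical.

```python
from typing import Dict

# Sample counts per family, as listed in the post.
SAMPLES: Dict[str, int] = {"APT1": 292, "Crypto": 2024, "Locker": 434, "Zeus": 2014}


def class_shares(counts: Dict[str, int]) -> Dict[str, float]:
    """Fraction of the whole dataset contributed by each family."""
    total = sum(counts.values())
    return {label: n / total for label, n in counts.items()}


def balanced_weights(counts: Dict[str, int]) -> Dict[str, float]:
    """Inverse-frequency weights: n_samples / (n_classes * n_c)."""
    total = sum(counts.values())
    n_classes = len(counts)
    return {label: total / (n_classes * n) for label, n in counts.items()}


shares = class_shares(SAMPLES)
weights = balanced_weights(SAMPLES)
for label in SAMPLES:
    print(f"{label:7s} share={shares[label]:.3f} weight={weights[label]:.2f}")
```

With these counts APT1 gets a weight of about 4.08 and Crypto about 0.59, so during training each APT1 sample counts roughly seven times as much as a Crypto sample.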

I will definitely process the samples and build new datasets to share with everybody.

Where can I download the training datasets? HERE

Available Features and Frequency

List of currently available features with their occurrence counters:

‘file_access’: 138759,

‘sig_infostealer_ftp’: 13114,

‘sig_modifies_hostfile’: 5,

‘sig_removes_zoneid_ads’: 16,

‘sig_disables_uac’: 33,

‘sig_static_versioninfo_anomaly’: 0,

‘sig_stealth_webhistory’: 417,

‘reg_write’: 11942,

‘sig_network_cnc_http’: 132,

‘api_resolv’: 954690,

‘sig_stealth_network’: 71,

‘sig_antivm_generic_bios’: 6,

‘sig_polymorphic’: 705,

‘sig_antivm_generic_disk’: 7,

‘sig_antivm_vpc_keys’: 0,

‘sig_antivm_xen_keys’: 5,

‘sig_creates_largekey’: 16,

‘sig_exec_crash’: 6,

‘sig_antisandbox_sboxie_libs’: 144,

‘sig_mimics_icon’: 2,

‘sig_stealth_hidden_extension’: 9,

‘sig_modify_proxy’: 384,

‘sig_office_security’: 20,

‘sig_bypass_firewall’: 29,

‘sig_encrypted_ioc’: 476,

‘sig_dropper’: 671,

‘reg_delete’: 2545,

‘sig_critical_process’: 3,

‘service_start’: 312,

‘net_dns’: 486,

‘sig_ransomware_files’: 5,

‘sig_virus’: 781,

‘file_write’: 20218,

‘sig_antisandbox_suspend’: 2,

‘sig_sniffer_winpcap’: 16,

‘sig_antisandbox_cuckoocrash’: 11,

‘file_delete’: 5405,

‘sig_antivm_vmware_devices’: 1,

‘sig_ransomware_recyclebin’: 0,

‘sig_infostealer_keylog’: 44,

‘sig_clamav’: 1350,

‘sig_packer_vmprotect’: 1,

‘sig_antisandbox_productid’: 18,

‘sig_persistence_service’: 5,

‘sig_antivm_generic_diskreg’: 162,

‘sig_recon_checkip’: 4,

‘sig_ransomware_extensions’: 4,

‘sig_network_bind’: 190,

‘sig_antivirus_virustotal’: 175975,

‘sig_recon_beacon’: 23,

‘sig_deletes_shadow_copies’: 24,

‘sig_browser_security’: 216,

‘sig_modifies_desktop_wallpaper’: 83,

‘sig_network_torgateway’: 1,

‘sig_ransomware_file_modifications’: 23,

‘sig_antivm_vbox_files’: 7,

‘sig_static_pe_anomaly’: 2194,

‘sig_copies_self’: 591,

‘sig_antianalysis_detectfile’: 51,

‘sig_antidbg_devices’: 6,

‘file_drop’: 6627,

‘sig_driver_load’: 72,

‘sig_antimalware_metascan’: 1045,

‘sig_modifies_certs’: 46,

‘sig_antivm_vpc_files’: 0,

‘sig_stealth_file’: 1566,

‘sig_mimics_agent’: 131,

‘sig_disables_windows_defender’: 3,

‘sig_ransomware_message’: 10,

‘sig_network_http’: 216,

‘sig_injection_runpe’: 474,

‘sig_antidbg_windows’: 455,

‘sig_antisandbox_sleep’: 271,

‘sig_stealth_hiddenreg’: 13,

‘sig_disables_browser_warn’: 20,

‘sig_antivm_vmware_files’: 6,

‘sig_infostealer_mail’: 617,

‘sig_ipc_namedpipe’: 13,

‘sig_persistence_autorun’: 2355,

‘sig_stealth_hide_notifications’: 19,

‘service_create’: 62,

‘sig_reads_self’: 14460,

‘mutex_access’: 15017,

‘sig_antiav_detectreg’: 4,

‘sig_antivm_vbox_libs’: 0,

‘sig_antisandbox_sunbelt_libs’: 2,

‘sig_antiav_detectfile’: 2,

‘reg_access’: 774910,

‘sig_stealth_timeout’: 1024,

‘sig_antivm_vbox_keys’: 0,

‘sig_persistence_ads’: 3,

‘sig_mimics_filetime’: 3459,

‘sig_banker_zeus_url’: 1,

‘sig_origin_langid’: 71,

‘sig_antiemu_wine_reg’: 1,

‘sig_process_needed’: 137,

‘sig_antisandbox_restart’: 24,

‘sig_recon_programs’: 5318,

‘str’: 1443775,

‘sig_antisandbox_unhook’: 1364,

‘sig_antiav_servicestop’: 78,

‘sig_injection_createremotethread’: 311,

‘pe_imports’: 301256,

‘sig_process_interest’: 295,

‘sig_bootkit’: 25,

‘reg_read’: 458477,

‘sig_stealth_window’: 1267,

‘sig_downloader_cabby’: 50,

‘sig_multiple_useragents’: 101,

‘pe_sec_character’: 22180,

‘sig_disables_windowsupdate’: 0,

‘sig_antivm_generic_system’: 6,

‘cmd_exec’: 2842,

‘net_con’: 406,

‘sig_bcdedit_command’: 14,

‘pe_sec_entropy’: 22180,

‘pe_sec_name’: 22180,

‘sig_creates_nullvalue’: 1,

‘sig_packer_entropy’: 3603,

‘sig_packer_upx’: 1210,

‘sig_disables_system_restore’: 6,

‘sig_ransomware_radamant’: 0,

‘sig_infostealer_browser’: 7,

‘sig_injection_rwx’: 3613,

‘sig_deletes_self’: 600,

‘file_read’: 50632,

‘sig_fraudguard_threat_intel_api’: 226,

‘sig_deepfreeze_mutex’: 1,

‘sig_modify_uac_prompt’: 1,

‘sig_api_spamming’: 251,

‘sig_modify_security_center_warnings’: 18,

‘sig_antivm_generic_disk_setupapi’: 25,

‘sig_pony_behavior’: 159,

‘sig_banker_zeus_mutex’: 442,

‘net_http’: 223,

‘sig_dridex_behavior’: 0,

‘sig_internet_dropper’: 3,

‘sig_cryptAM’: 0,

‘sig_recon_fingerprint’: 305,

‘sig_antivm_vmware_keys’: 0,

‘sig_infostealer_bitcoin’: 207,

‘sig_antiemu_wine_func’: 0,

‘sig_rat_spynet’: 3,

‘sig_origin_resource_langid’: 2255
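The post does not describe how these occurrence counters were produced. One plausible reading, assumed here, is that every evidence token attached to a feature counts as one occurrence across all samples. The sketch below computes counters that way with `collections.Counter` and then keeps only features seen at least once, most frequent first. The function names, the record layout and the example values are hypothetical.

```python
from collections import Counter
from typing import Dict, Iterable, List


def feature_frequencies(records: Iterable[Dict[str, str]], label_key: str = "label") -> Counter:
    """Count evidence tokens per feature key across all records (assumed counting rule)."""
    freq: Counter = Counter()
    for record in records:
        for key, value in record.items():
            if key == label_key:
                continue  # the label is the target, not a feature
            # A key with empty evidence is still recorded, with a count of 0.
            freq[key] += len(value.split())
    return freq


def informative_features(freq: Dict[str, int], min_count: int = 1) -> List[str]:
    """Keep features seen at least min_count times, most frequent first."""
    kept = (k for k, n in freq.items() if n >= min_count)
    return sorted(kept, key=lambda k: (-freq[k], k))


# Hypothetical records.
records = [
    {"sig_antivirus_virustotal": "ffebfdb8 9dbdd699", "sig_antivm_vpc_keys": "", "label": "Zeus"},
    {"sig_antivirus_virustotal": "45036f7d", "api_resolv": "kernel32.dll.LoadLibraryA", "label": "APT1"},
]
freq = feature_frequencies(records)
print(informative_features(freq))  # ['sig_antivirus_virustotal', 'api_resolv']
```

Features whose counter is 0 in the list above, such as ‘sig_antivm_vpc_keys’ or ‘sig_ransomware_radamant’, never fire on these samples and therefore cannot help a model separate families, so dropping them is a cheap first step of feature selection.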

Cite The DataSet

If you find these results useful, please cite them:

@misc{MR,
  author = "Marco Ramilli",
  title = "Malware Training Sets: a machine learning dataset for everyone",
  year = "2016",
  url = "http://marcoramilli.blogspot.it/2016/12/malware-training-sets-machine-learning.html",
  note = "[Online; December 2016]"
}
